15.06.2019 - Anılcan Atik

DeepDream Project¶

DeepDream is an experiment that visualizes the patterns learned by a neural network. Similar to when a child watches clouds and tries to interpret random shapes, DeepDream over-interprets and enhances the patterns it sees in an image.

This individual project for Advanced Machine Learning is based on a resource that contains a minimal implementation of DeepDream, as described in a blog post by Alexander Mordvintsev. Most of the code in this project comes from that resource.

It does so by forwarding an image through the network, then calculating the gradient of the activations of a particular layer with respect to the image. The image is then modified to increase these activations, enhancing the patterns seen by the network, and resulting in a dream-like image. This process was dubbed "Inceptionism" (a reference to InceptionNet, and the movie Inception).

I will apply this technique to an image of my own choosing.

Library¶

import tensorflow as tf
import numpy as np
import matplotlib as mpl
import IPython.display as display
import PIL.Image
from tensorflow.keras.preprocessing import image

Choosing an image to dream-ify¶

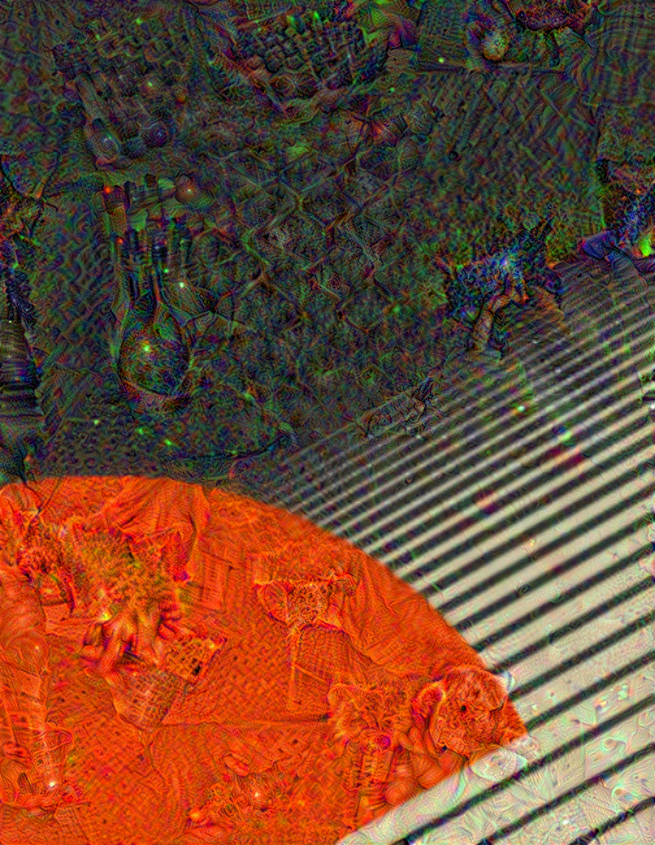

For this tutorial, I'll be using an image of a minimal red planet with rings.¶

url = 'https://i.imgur.com/xMSN3oj.jpg'

# Download an image and read it into a NumPy array.
def download(url, max_dim=None):
    name = url.split('/')[-1]
    image_path = tf.keras.utils.get_file(name, origin=url)
    img = PIL.Image.open(image_path)
    if max_dim:
        # Shrink in place so that neither side exceeds max_dim.
        img.thumbnail((max_dim, max_dim))
    return np.array(img)

# Map an image from the model's [-1, 1] range back to uint8 [0, 255].
def deprocess(img):
    img = 255*(img + 1.0)/2.0
    return tf.cast(img, tf.uint8)

# Display an image
def show(img):
    display.display(PIL.Image.fromarray(np.array(img)))

# Downsizing the image makes it easier to work with.
original_img = download(url, max_dim=500)
show(original_img)
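As a quick check of the scaling used by `deprocess`, the sketch below applies the same formula with NumPy only. The `preprocess` helper is a hypothetical stand-in for the Inception-style scaling to [-1, 1] (pixel / 127.5 - 1) and `deprocess_np` is an illustrative name; neither is part of the project code.

```python
import numpy as np

def preprocess(pixels):
    # Hypothetical stand-in: scale uint8 pixels from [0, 255] to [-1, 1].
    return pixels.astype(np.float32) / 127.5 - 1.0

def deprocess_np(img):
    # Same formula as `deprocess`, using NumPy instead of TensorFlow.
    # The cast truncates, so a value can come back one level lower.
    return (255 * (img + 1.0) / 2.0).astype(np.uint8)

pixels = np.array([0, 64, 128, 255], dtype=np.uint8)
scaled = preprocess(pixels)
restored = deprocess_np(scaled)
print(scaled.min(), scaled.max())
print(restored)
```

Because the final cast truncates rather than rounds, the round trip is only guaranteed to land within one intensity level of the original pixel.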

The futuristic and minimalistic elements in this image attract my attention, and I thought it would be a good idea to see how an ML algorithm interprets it.¶

In this part we download and prepare a pre-trained image classification model.

We will be using InceptionV3.

To choose layers, we will consult the model feature visualization appendix, which gives visual clues about what the layers of a GoogLeNet model trained on ImageNet images respond to.

base_model = tf.keras.applications.InceptionV3(include_top=False, weights='imagenet')

The idea in DeepDream is to choose a layer (or layers) and maximize the "loss" in a way that the image increasingly "excites" the layers.

By maximizing the "loss" function instead of minimizing it as usual, we get an image that deviates more and more from the original.

Each layer in the DNN model specializes in a specific kind of feature, so choosing different layers alters the original photo in the way that the chosen layer is specialized.

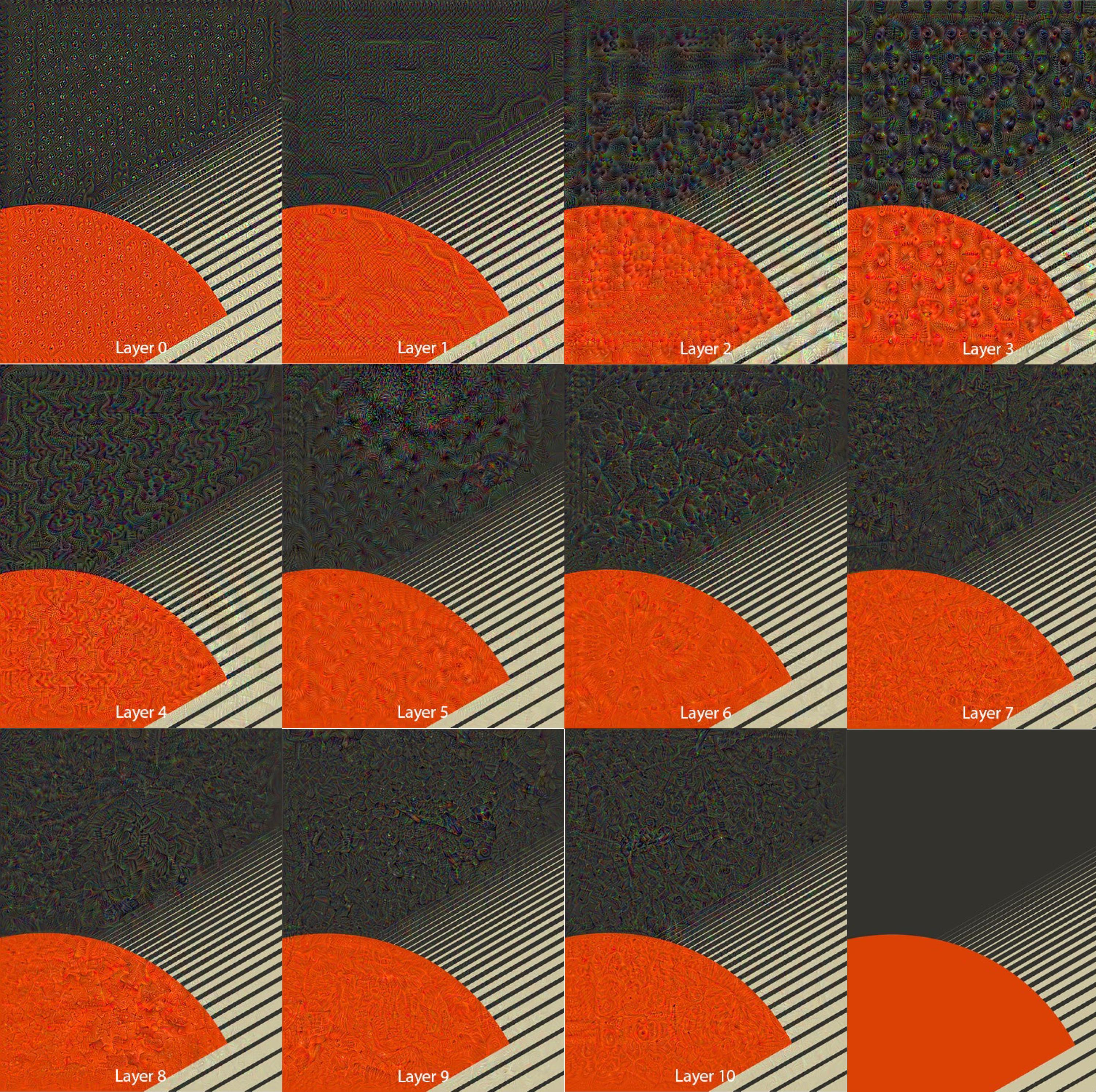

In the InceptionV3 model, lower layers tend to produce strokes or simple patterns, while deeper layers produce more sophisticated features, or even whole objects.

The InceptionV3 architecture is quite large (for a graph of the model architecture see TensorFlow's research repo). For DeepDream, the layers of interest are those where the convolutions are concatenated. There are 11 of these layers in InceptionV3, named 'mixed0' through 'mixed10'. Using different layers will result in different dream-like images. Deeper layers respond to higher-level features (such as eyes and faces), while earlier layers respond to simpler features (such as edges, shapes, and textures).
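The naming scheme described above can be written out in a few lines of plain Python. This is only a sketch: `validate_layer_names` is a hypothetical helper, not part of Keras, and it checks a selection against the 'mixed0' to 'mixed10' pattern without loading the model.

```python
# The eleven concatenation layers follow the pattern 'mixed<N>'.
mixed_layer_names = [f"mixed{i}" for i in range(11)]

def validate_layer_names(selected):
    # Hypothetical helper: reject names outside mixed0..mixed10.
    unknown = [name for name in selected if name not in mixed_layer_names]
    if unknown:
        raise ValueError("Unknown layer names: {}".format(unknown))
    return list(selected)

chosen = validate_layer_names(["mixed8", "mixed9"])
print(chosen)
```

Checking names up front gives a clearer error than a failed layer lookup later in the notebook.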

# Maximize the activations of these layers
names = ['mixed8','mixed9']
layers = [base_model.get_layer(name).output for name in names]
print(layers)

# Create the feature extraction model
dream_model = tf.keras.Model(inputs=base_model.input, outputs=layers)

Calculating loss¶

The loss is the sum of the activations in the chosen layers.

The loss is normalized at each layer (the mean activation is used rather than the raw sum) so that the contribution from larger layers does not outweigh that of smaller layers.

Normally, a loss is a quantity you wish to minimize via gradient descent, for example to reduce the error of a classification model. In DeepDream, you will maximize this loss via gradient ascent.

def calc_loss(img, model):
    # Pass forward the image through the model to retrieve the activations.
    # Converts the image into a batch of size 1.
    img_batch = tf.expand_dims(img, axis=0)
    layer_activations = model(img_batch)
    # A single-output model returns one tensor; wrap it in a list.
    if len(layer_activations) == 1:
        layer_activations = [layer_activations]

    losses = []
    for act in layer_activations:
        # The mean (not the sum) keeps large layers from dominating.
        loss = tf.math.reduce_mean(act)
        losses.append(loss)

    return tf.reduce_sum(losses)
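To illustrate why the per-layer mean matters, here is a minimal NumPy sketch of the same loss on made-up activations. The shapes and values are assumptions chosen for illustration, and `calc_loss_np` is a hypothetical NumPy counterpart of `calc_loss`.

```python
import numpy as np

def calc_loss_np(layer_activations):
    # Mean per layer, then sum over layers.
    return sum(float(np.mean(act)) for act in layer_activations)

# Two fake layers with the same activation level but very different sizes.
small = np.full((1, 2, 2, 4), 2.0)    # 16 values
large = np.full((1, 8, 8, 16), 2.0)   # 1024 values

loss = calc_loss_np([small, large])
raw_sum = float(small.sum() + large.sum())
large_share_of_raw_sum = float(large.sum()) / raw_sum
print(loss, large_share_of_raw_sum)
```

With the mean, each layer contributes equally here. With a raw sum, almost all of the total would come from the larger layer.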

Gradient ascent¶

Once we have calculated the loss for the chosen layers, we need to calculate its gradients with respect to the image and add them to the original image.

Introducing gradients to the image enhances the patterns seen by the network. At each step, you will have created an image that increasingly excites the activations of certain layers in the network.

The method that does this, below, is wrapped in a tf.function for performance. It uses an input_signature to ensure that the function is not retraced for different image sizes or steps/step_size values. See the Concrete functions guide for details.

class DeepDream(tf.Module):
    def __init__(self, model):
        self.model = model

    @tf.function(
        input_signature=(
            tf.TensorSpec(shape=[None,None,3], dtype=tf.float32),
            tf.TensorSpec(shape=[], dtype=tf.int32),
            tf.TensorSpec(shape=[], dtype=tf.float32),)
    )
    def __call__(self, img, steps, step_size):
        print("Tracing")
        loss = tf.constant(0.0)
        for n in tf.range(steps):
            with tf.GradientTape() as tape:
                # This needs gradients relative to `img`
                # `GradientTape` only watches `tf.Variable`s by default
                tape.watch(img)
                loss = calc_loss(img, self.model)

            # Calculate the gradient of the loss with respect to the pixels of the input image.
            gradients = tape.gradient(loss, img)

            # Normalize the gradients.
            gradients /= tf.math.reduce_std(gradients) + 1e-8

            # In gradient ascent, the "loss" is maximized so that the input image increasingly "excites" the layers.
            # You can update the image by directly adding the gradients (because they're the same shape!)
            img = img + gradients*step_size
            img = tf.clip_by_value(img, -1, 1)

        return loss, img

deepdream = DeepDream(dream_model)
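To see the update rule in isolation, the following NumPy sketch runs the same normalize, step, and clip loop on a toy problem. The "network" here is an assumption made for illustration: a fixed random `pattern` whose mean elementwise product with the image plays the role of the loss, so the gradient can be written by hand instead of using `tf.GradientTape`.

```python
import numpy as np

rng = np.random.default_rng(0)
pattern = rng.standard_normal((8, 8, 3))        # hypothetical stand-in for a learned filter
img = rng.uniform(-0.1, 0.1, size=(8, 8, 3))    # toy "image" within [-1, 1]

def toy_loss(img):
    # Plays the role of calc_loss: mean "activation" of the toy filter.
    return float(np.mean(img * pattern))

def toy_gradient(img):
    # Analytic gradient of mean(img * pattern) with respect to img.
    return pattern / pattern.size

start_loss = toy_loss(img)
step_size = 0.01
for _ in range(100):
    grad = toy_gradient(img)
    grad = grad / (np.std(grad) + 1e-8)             # normalize the gradients
    img = np.clip(img + grad * step_size, -1, 1)    # ascend, then stay in range
end_loss = toy_loss(img)
print(start_loss, end_loss)
```

Dividing by the standard deviation makes the effective step size independent of the gradient's raw magnitude, which is why a fixed `step_size` such as 0.01 can work across different layers.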

Main Loop¶

def run_deep_dream_simple(img, steps=100, step_size=0.01):
    # Convert from uint8 to the range expected by the model.
    img = tf.keras.applications.inception_v3.preprocess_input(img)
    img = tf.convert_to_tensor(img)
    step_size = tf.convert_to_tensor(step_size)
    steps_remaining = steps
    step = 0
    while steps_remaining:
        if steps_remaining>100:
            run_steps = tf.constant(100)
        else:
            run_steps = tf.constant(steps_remaining)
        steps_remaining -= run_steps
        step += run_steps

        loss, img = deepdream(img, run_steps, tf.constant(step_size))

        display.clear_output(wait=True)
        show(deprocess(img))
        print ("Step {}, loss {}".format(step, loss))

    result = deprocess(img)
    display.clear_output(wait=True)
    show(result)

    return result

Layers:¶

I use the mixed8 and mixed9 layers, as selected in `names` above, to generate the dream image.¶

dream_img = run_deep_dream_simple(img=original_img,
                                  steps=100, step_size=0.01)

Scaling up with tiles¶

One thing to consider is that as the image increases in size, so do the time and memory necessary to perform the gradient calculation. The simple implementation above will not work on very large images, or when the image is scaled up over many octaves (passes at increasing resolution).

To avoid this issue you can split the image into tiles and compute the gradient for each tile.

Applying random shifts to the image before each tiled computation prevents tile seams from appearing.

Start by implementing the random shift:

def random_roll(img, maxroll):
    # Randomly shift the image to avoid tiled boundaries.
    shift = tf.random.uniform(shape=[2], minval=-maxroll, maxval=maxroll, dtype=tf.int32)
    shift_down, shift_right = shift[0],shift[1]
    img_rolled = tf.roll(tf.roll(img, shift_right, axis=1), shift_down, axis=0)
    return shift_down, shift_right, img_rolled

shift_down, shift_right, img_rolled = random_roll(np.array(original_img), 512)
show(img_rolled)
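Because a roll wraps pixels around the edges instead of discarding them, the shift can be reversed exactly by rolling again with the negated offsets. The NumPy sketch below shows this on a small array. The fixed offsets are an assumption for illustration; `random_roll` draws them at random.

```python
import numpy as np

img = np.arange(4 * 5 * 3).reshape(4, 5, 3)   # tiny 4x5 RGB "image"
shift_down, shift_right = 1, 2                # fixed here; random in random_roll

# Shift right along the width axis, then down along the height axis.
rolled = np.roll(np.roll(img, shift_right, axis=1), shift_down, axis=0)

# Undo the shift by rolling with the negated offsets.
restored = np.roll(np.roll(rolled, -shift_right, axis=1), -shift_down, axis=0)

print(np.array_equal(restored, img))
```

The same negated roll can be applied to gradients computed on the shifted image so that they line up with the unshifted pixels again.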

class TiledGradients(tf.Module):
    def __init__(self, model):
        self.model = model

    @tf.function(
        input_signature=(
            tf.TensorSpec(shape=[None,None,3], dtype=tf.float32),
            tf.TensorSpec(shape=[], dtype=tf.int32),)
    )
    def __call__(self, img, tile_size=512):
        shift_down, shift_right, img_rolled = random_roll(img, tile_size)

        # Initialize the image gradients to zero.
        gradients = tf.zeros_like(img_rolled)

        # Skip the last tile, unless there's only one tile.
        xs = tf.range(0, img_rolled.shape[0], tile_size)[:-1]
        if not tf.cast(len(xs), bool):
            xs = tf.constant([0])
        ys = tf.range(0, img_rolled.shape[1], tile_size)[:-1]
        if not tf.cast(len(ys), bool):
            ys = tf.constant([0])

        for x in xs:
            for y in ys:
                # Calculate the gradients for this tile.
                with tf.GradientTape() as tape:
                    # This needs gradients relative to `img_rolled`.
                    # `GradientTape` only watches `tf.Variable`s by default.
                    tape.watch(img_rolled)

                    # Extract a tile out of the image.
                    img_tile = img_rolled[x:x+tile_size, y:y+tile_size]
                    loss = calc_loss(img_tile, self.model)

                # Update the image gradients for this tile.
                gradients = gradients + tape.gradient(loss, img_rolled)

        # Undo the random shift applied to the image and its gradients.
        gradients = tf.roll(tf.roll(gradients, -shift_right, axis=1), -shift_down, axis=0)

        # Normalize the gradients.
        gradients /= tf.math.reduce_std(gradients) + 1e-8

        return gradients

get_tiled_gradients = TiledGradients(dream_model)
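The "skip the last tile, unless there's only one tile" rule in the class above can be checked with plain Python ranges. This is a sketch; `tile_starts` is a hypothetical helper that mirrors how `xs` and `ys` are built, not part of the project code.

```python
def tile_starts(length, tile_size):
    # Candidate starts every `tile_size` pixels, dropping the last one.
    starts = list(range(0, length, tile_size))[:-1]
    # If nothing is left, fall back to a single tile at 0.
    return starts if starts else [0]

print(tile_starts(500, 512))
print(tile_starts(1024, 512))
print(tile_starts(1300, 512))
```

As far as I can tell, pixels beyond the last kept tile receive no gradient in a given step. The random shift changes which pixels those are from step to step, which is also what keeps the tile seams from showing.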

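Putting this together gives a scalable, octave-aware DeepDream implementation. Each octave is one round of gradient ascent at a different image scale, so the dream is applied at several resolutions in turn: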

def run_deep_dream_with_octaves(img, steps_per_octave=100, step_size=0.01,
                                octaves=range(-2,3), octave_scale=1.3):
    base_shape = tf.shape(img)
    img = tf.keras.preprocessing.image.img_to_array(img)
    img = tf.keras.applications.inception_v3.preprocess_input(img)

    initial_shape = img.shape[:-1]
    img = tf.image.resize(img, initial_shape)
    for octave in octaves:
        # Scale the image based on the octave
        new_size = tf.cast(tf.convert_to_tensor(base_shape[:-1]), tf.float32)*(octave_scale**octave)
        img = tf.image.resize(img, tf.cast(new_size, tf.int32))

        for step in range(steps_per_octave):
            gradients = get_tiled_gradients(img)
            img = img + gradients*step_size
            img = tf.clip_by_value(img, -1, 1)

            if step % 10 == 0:
                display.clear_output(wait=True)
                show(deprocess(img))
                print ("Octave {}, Step {}".format(octave, step))

    result = deprocess(img)
    return result

img = run_deep_dream_with_octaves(img=original_img, step_size=0.01)

display.clear_output(wait=True)
# `base_shape` inside the function is local, so compute the target size here.
base_shape = tf.shape(original_img)[:-1]
img = tf.image.resize(img, base_shape)
img = tf.image.convert_image_dtype(img/255.0, dtype=tf.uint8)
# Show the final resized image (`dimg` only existed inside the function).
show(img)
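To get a feel for how large the image becomes at each octave, the sketch below repeats the size calculation in plain Python. `octave_sizes` is a hypothetical helper, and the 500x500 base shape is an assumption for illustration. The TensorFlow code does the multiplication in float32, so its sizes could differ by a pixel.

```python
def octave_sizes(base_shape, octaves=range(-2, 3), octave_scale=1.3):
    # Height and width at each octave, truncated to whole pixels.
    sizes = []
    for octave in octaves:
        scale = octave_scale ** octave
        sizes.append(tuple(int(dim * scale) for dim in base_shape))
    return sizes

sizes = octave_sizes((500, 500))
for octave, size in zip(range(-2, 3), sizes):
    print("Octave {}: {}".format(octave, size))
```

Negative octaves start below the original resolution, so the first passes are cheap and lay down coarse structure. The final, largest octaves are where tiling matters for memory.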